Remember that website you spent weeks designing and filling with great content, only to find it invisible in Google search results? That’s where technical SEO comes in: it’s the unsung hero behind successful websites.

While creating great content is crucial, the technical foundation of your website determines whether search engines can find, understand, and properly rank your pages.

Think of technical SEO as the engine of your car—you might have the most beautiful vehicle in the world, but without a properly functioning engine, you’re not going anywhere.

In this guide, I’ll walk you through a comprehensive technical SEO checklist to ensure your website doesn’t just look good but performs well in search results too.

What is Technical SEO?

Technical SEO refers to the process of optimizing the infrastructure of your website to help search engines crawl and index your content more effectively. Unlike content-focused SEO strategies that deal with keywords and engaging writing, technical SEO addresses the “behind the scenes” aspects that many site owners overlook.

At its core, technical SEO focuses on improving site infrastructure in areas like:

- How easily search engines can discover and understand your content

- How quickly your pages load for users

- How well your site functions across different devices

- How securely your site handles user information

Why does this matter? Because Google and other search engines want to deliver the best possible experience to their users. If your site is slow, confusing to navigate, or difficult to access on mobile devices, search engines will likely rank your competitors higher—even if your content is superior.

Now, let’s dive into our technical SEO checklist to help you build a solid foundation for your website’s success.

15 Technical SEO Checklist Items For 2025

Here is the ultimate technical SEO checklist you can work through to optimize your website.

1. Ensure Your Website Is Crawlable And Indexable

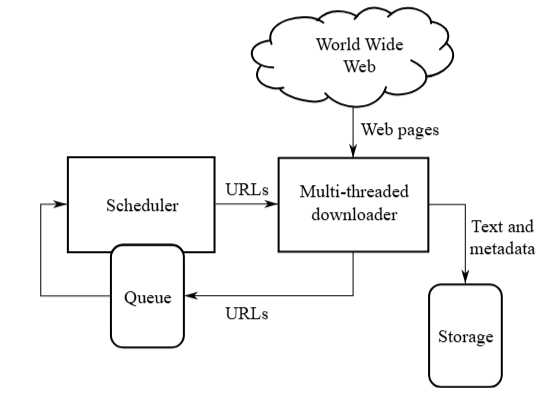

For your content to appear in search results, search engines must first be able to discover and understand it. This process begins with crawling (navigating through your website) and then indexing (adding your pages to a search engine’s database).

How to check:

- Use Google Search Console to see which pages have been indexed

- Search Google for “site:yoursite.com” to see a rough sample of the pages Google has indexed

- Check that your robots.txt file isn’t accidentally blocking important content

Common issues to fix:

- “Noindex” tags accidentally applied to important pages

- Critical content blocked by robots.txt

- Login barriers preventing crawlers from accessing content

Real-world example: A small business website was wondering why their new product pages weren’t appearing in search results. Upon investigation, they discovered that a developer had added “noindex” meta tags during development to prevent the pages from appearing in search results while they were being built—but forgot to remove them when the pages went live. After removing these tags, the pages were indexed within days.
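A quick way to catch a leftover noindex like this is to check each important page’s HTML and response headers before launch. The Python sketch below is a minimal, standard-library-only example; the function and class names are our own, and it only looks at robots and googlebot meta tags plus a plain X-Robots-Tag header (it doesn’t handle crawler-specific header values such as “googlebot: noindex”).

```python
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attributes without a value arrive as None, so default them to ""
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            # One tag can hold several comma-separated directives, e.g. "noindex, nofollow"
            content = attributes.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindexed(html: str, headers: Optional[dict[str, str]] = None) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # The same directive can also be sent as an HTTP header (exact-case key assumed here)
    header_value = (headers or {}).get("X-Robots-Tag", "").lower()
    header_directives = [d.strip() for d in header_value.split(",")]
    return "noindex" in parser.directives or "noindex" in header_directives
```

Running it against the live versions of your key templates after each deployment is one way to make sure a development-only noindex never ships.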

2. Check For Proper Use of Robots.txt

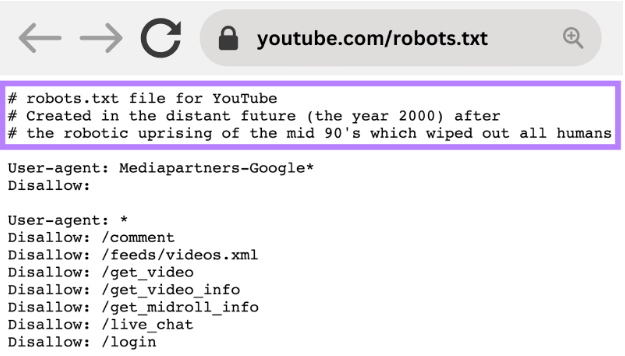

Your robots.txt file acts as a set of instructions for search engine crawlers, telling them which parts of your site they can access. Using it incorrectly can prevent your content from being indexed.

How to implement:

- Create a robots.txt file in your root directory (e.g., www.yoursite.com/robots.txt)

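- Use it to keep crawlers out of admin pages, internal search results, or other low-value areas (robots.txt is publicly readable and is not a security measure, so protect genuinely private content with a login)

- Don’t use it to keep pages out of search results: a blocked URL can still be indexed if other sites link to it. Use a noindex meta tag instead, and leave the page crawlable so search engines can see that tag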

Pro tip: Test new rules against your important URLs before you publish them, then review the robots.txt report in Google Search Console (which replaced the older robots.txt Tester tool) to confirm you’re not accidentally blocking important content.
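To sanity-check rules before publishing them, you can run them against a list of URLs that must stay crawlable. This sketch uses Python’s built-in urllib.robotparser module; the robots.txt content, the URLs, and the blocked_urls helper are hypothetical. Treat the result as an approximation: Python’s parser applies the first matching rule rather than Google’s most-specific-rule logic, and it doesn’t understand wildcard patterns inside paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com; replace with your own rules.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""

# Hypothetical URLs that search engines must be able to crawl.
MUST_BE_CRAWLABLE = [
    "https://www.example.com/",
    "https://www.example.com/blog/technical-seo-checklist",
    "https://www.example.com/products/blue-running-shoes",
]


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that this robots.txt would block for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so nothing is fetched over the network
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    problems = blocked_urls(ROBOTS_TXT, MUST_BE_CRAWLABLE)
    print("Blocked important URLs:", problems or "none")
```

An empty result means none of the listed pages would be blocked; any URL it returns is worth investigating before the file goes live.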

3. Use a Clean and Optimized URL Structure

URLs are not just addresses—they’re opportunities to tell both users and search engines what your page is about.

Best practices:

- Keep URLs short and descriptive

- Include relevant keywords (but don’t stuff)

- Use hyphens to separate words (not underscores)

- Stick to lowercase letters

- Avoid parameters when possible (like ?id=123)

Examples:

- Good: yoursite.com/blue-running-shoes

- Poor: yoursite.com/p/24?category_id=56&product=blue-shoe

Creating clean URLs makes your content more shareable, easier to understand at a glance, and generally more appealing to click. It also helps search engines understand what your page is about before they even crawl the content.
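If your CMS doesn’t generate clean slugs for you, a small helper can apply the rules above automatically. This is a minimal Python sketch; the slugify name is our own rather than a library function. It lowercases the text, strips accents where possible, and joins words with hyphens. It doesn’t remove stop words or enforce a length limit, and characters with no ASCII equivalent (non-Latin scripts, for example) are simply dropped.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Decompose accented letters (é -> e + accent mark), then drop anything that isn't ASCII
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    lowered = ascii_text.lower()
    # Replace every run of characters that aren't a-z or 0-9 (spaces, underscores, punctuation) with one hyphen
    hyphenated = re.sub(r"[^a-z0-9]+", "-", lowered)
    # Trim hyphens left over at the start or end
    return hyphenated.strip("-")
```

For example, slugify("Blue Running Shoes!") gives "blue-running-shoes", matching the good URL above.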

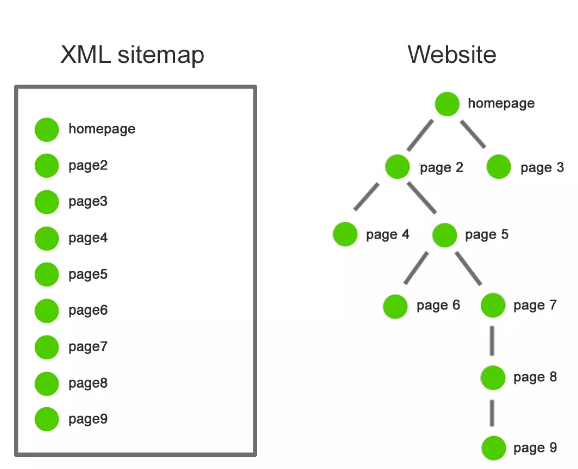

4. Implement an XML Sitemap and Submit to Search Engines

An XML sitemap serves as a roadmap of your website, helping search engines discover all your important pages.

How to create and submit:

- Use plugins like Yoast SEO (WordPress) or dedicated sitemap generators

- Include all important pages but exclude thin content or duplicates

- Submit your sitemap to Google Search Console and Bing Webmaster Tools

- Update your sitemap when you add or remove significant content

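A good XML sitemap lists the canonical URL of each important page and, ideally, a lastmod (last modified) date showing when the page last changed significantly. Google uses lastmod when it is consistently accurate, but it ignores the changefreq and priority values, so don’t count on those to steer crawling.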

Pro tip: For larger sites, consider creating separate sitemaps for different content types (blog posts, product pages, etc.) and then a sitemap index file that references them all.
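For a small site without a plugin, a sitemap can also be generated directly. This is a minimal Python sketch using the standard library’s xml.etree.ElementTree; the build_sitemap name, the page list, and the dates are hypothetical, and in practice each lastmod value should reflect when that page’s content actually changed. It writes only the required loc element plus lastmod.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Return sitemap XML for (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
        # W3C date format, e.g. 2025-01-15
        ET.SubElement(url_element, "lastmod").text = last_modified.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    # Write the XML declaration by hand so it always says UTF-8
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the output as sitemap.xml in your root directory, then submit its URL in Google Search Console and Bing Webmaster Tools.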

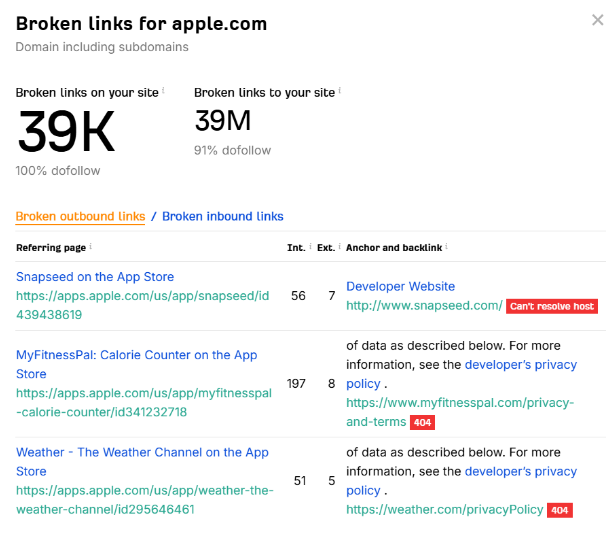

5. Fix Broken Internal and External Links

Broken links create dead ends for both users and search crawlers, wasting your crawl budget and creating poor user experiences.

How to identify:

- Use tools like Screaming Frog, Ahrefs, or the CognitiveSEO Site Audit tool

- Check the Page indexing report (formerly called “Coverage”) in Google Search Console for 404 errors

- Look for signs of “link rot” in older content

How to fix:

- Update internal links to point to current pages

- Set up 301 redirects for changed URLs

- Replace or remove external links that no longer work

One marketing agency discovered that their 2-year-old blog posts contained links to studies and resources that no longer existed. By updating these links to point to current research, they improved user experience and saw an 18% increase in time spent on these pages.
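Dedicated crawlers do this at scale, but the core idea is simple: collect every link on a page and flag the ones that don’t resolve. This Python sketch uses only the standard library; the class and function names are our own, and the status checker is passed in as a parameter so you can swap in results from a crawl export. It sends HEAD requests, and some servers answer HEAD with 405, so a production version might retry those with GET.

```python
import urllib.error
import urllib.request
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from every <a href> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Skip in-page anchors and non-web links
        if href and not href.startswith(("#", "mailto:", "tel:")):
            # Resolve relative links against the page's own URL
            self.links.append(urljoin(self.base_url, href))


def http_status(url: str) -> Optional[int]:
    """Final HTTP status after redirects, or None if the host can't be reached."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 404, 410, 500, ...
    except OSError:
        return None  # DNS failure, refused connection, timeout


def find_broken_links(
    html: str,
    page_url: str,
    fetch_status: Callable[[str], Optional[int]] = http_status,
) -> list[str]:
    """Return links on the page that are unreachable or answer with a 4xx/5xx status."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    broken = []
    for link in dict.fromkeys(collector.links):  # de-duplicate, keep order
        status = fetch_status(link)
        if status is None or status >= 400:
            broken.append(link)
    return broken
```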

6. Ensure Your Site is Mobile-Friendly

With Google’s mobile-first indexing, how your site performs on mobile devices directly impacts your rankings.

Key areas to optimize:

- Responsive design that adapts to all screen sizes

- Readable text without zooming

- Adequate spacing for touch elements

- No horizontal scrolling required

- Fast loading on mobile networks

How to test: Google has retired its standalone Mobile-Friendly Test tool, so run a Lighthouse mobile audit (in Chrome DevTools or PageSpeed Insights) and review actual pages on various devices. Pay special attention to navigation, forms, and interactive elements.

Mobile optimization is no longer optional—it’s a fundamental requirement. Sites that provide poor mobile experiences will struggle to rank well, regardless of their content quality.
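One small automated check you can run alongside testing on real devices is whether a page declares a responsive viewport. Without a viewport meta tag set to width=device-width, mobile browsers typically lay the page out at desktop width and shrink it, which forces zooming. This Python sketch (names are our own) checks only that one tag, so it is a smoke test rather than a full mobile audit.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportParser(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = {name: (value or "") for name, value in attrs}
        is_viewport = tag == "meta" and attributes.get("name", "").lower() == "viewport"
        if is_viewport and self.viewport is None:
            self.viewport = attributes.get("content", "")


def has_responsive_viewport(html: str) -> bool:
    """True if the page sets width=device-width, which responsive layouts rely on."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.viewport is None:
        return False
    # Normalize "width = device-width, initial-scale=1" into comparable pieces
    settings = [part.replace(" ", "").lower() for part in parser.viewport.split(",")]
    return "width=device-width" in settings
```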

7. Use HTTPS Across Your Entire Site

HTTPS encryption isn’t just for e-commerce—it’s a ranking factor and trust signal for all websites.

Implementation steps:

- Get an SSL/TLS certificate (many hosts provide one for free, often through Let’s Encrypt)

- Install the certificate on your server

- Set up proper 301 redirects from HTTP to HTTPS

- Update internal links to use HTTPS

- Check for mixed content warnings

Why it matters: Beyond the small ranking boost, secure connections protect user data and build trust. Users are increasingly wary of sites without the padlock icon in their browser bar.

A local business website saw a 9% increase in contact form submissions simply by switching to HTTPS, likely because visitors felt more comfortable sharing their information on a secure site.
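Mixed content, meaning an HTTPS page that still loads images, scripts, or stylesheets over plain HTTP, is the most common loose end after a switch. The Python sketch below scans a page’s HTML for such http:// subresources; the tag-to-attribute list is our own assumption and not exhaustive (it ignores srcset and CSS url() references, for example).

```python
from html.parser import HTMLParser

# Tags that load subresources, and the attribute holding the URL (assumed list).
# Ordinary <a href> links are navigation, not mixed content, so they are not checked.
SUBRESOURCE_ATTRS = {
    "img": "src",
    "script": "src",
    "iframe": "src",
    "audio": "src",
    "video": "src",
    "source": "src",
    "link": "href",  # stylesheets and icons; also flags http:// canonical/alternate links
}


class MixedContentFinder(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr_name = SUBRESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        value = dict(attrs).get(attr_name) or ""
        if value.lower().startswith("http://"):
            self.insecure.append(value)


def find_mixed_content(html: str) -> list[str]:
    """Return http:// subresource URLs found in an HTTPS page's HTML."""
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```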

8. Improve Site Speed and Core Web Vitals

Site speed and performance metrics directly impact both rankings and user experience. Google’s Core Web Vitals are specific measurements of page experience that have become official ranking factors.

Key speed factors to address:

- Image optimization (size and format)

- Efficient code and script loading

- Server response time

- Browser caching

- Content Delivery Networks (CDNs)

Core Web Vitals to monitor:

- Largest Contentful Paint (LCP): How long the largest image or text block in the viewport takes to render (aim for 2.5 seconds or less)

- Interaction to Next Paint (INP): Responsiveness to user interactions (aim for under 200ms)

- Cumulative Layout Shift (CLS): Visual stability (aim for under 0.1)

How to measure: Use Google PageSpeed Insights, Lighthouse, or the Core Web Vitals report in Google Search Console.

A photography portfolio site reduced their LCP from 4.2 seconds to 1.8 seconds by properly sizing and compressing images, implementing lazy loading, and upgrading their hosting. This improvement corresponded with a 26% increase in organic traffic over the following quarter.
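Google assesses these metrics at the 75th percentile of real page loads and sorts them into “good”, “needs improvement”, and “poor” bands. This Python sketch applies those published thresholds to your own field data; the function names are our own, and the nearest-rank percentile is one reasonable choice that may differ slightly from how Google’s tools compute it.

```python
import math

# (good if value <= first number, poor if value > second number)
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def p75(samples: list[float]) -> float:
    """75th percentile of the samples using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Classify a metric value as good, needs improvement, or poor."""
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value > poor_above:
        return "poor"
    return "needs improvement"
```

Applied to the example above, rate("LCP", 4.2) returns "poor" and rate("LCP", 1.8) returns "good".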

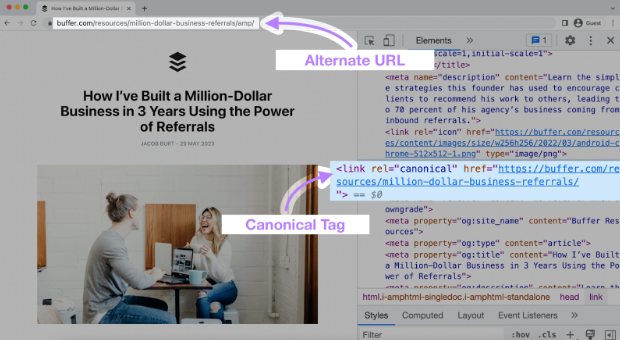

9. Use Canonical Tags to Prevent Duplicate Content

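Duplicate content dilutes your ranking potential and confuses search engines about which version of a page to show. A canonical tag, a link element with rel="canonical" in the page’s HTML head, tells search engines which URL you consider the preferred version (they treat it as a strong hint rather than a strict directive).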

When to use canonical tags:

- Product pages with multiple URLs (due to filters, sorting options, etc.)

- Content syndicated from other sources

- Mobile/desktop variations of the same content

- Printer-friendly versions of pages

Pro tip: Always use absolute URLs (including the https://) in canonical tags, not relative URLs, to avoid confusion.
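For filtered or sorted product URLs, the canonical is usually the same page without the extra query parameters. This Python sketch builds that tag; the canonical_link name and the keep_params option are hypothetical, and which parameters genuinely change a page’s content (and so should be kept) depends entirely on your site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def canonical_link(url: str, keep_params: tuple[str, ...] = ()) -> str:
    """Build a canonical <link> tag, dropping query parameters not listed in keep_params."""
    parts = urlsplit(url)
    if not parts.scheme or not parts.netloc:
        # Canonical URLs should be absolute, including the https:// scheme
        raise ValueError(f"canonical URL must be absolute: {url!r}")
    # Keep only parameters that change the page content; drop sorting, tracking, etc.
    kept = [(key, value) for key, value in parse_qsl(parts.query) if key in keep_params]
    # An empty query string means no "?" is emitted; the #fragment is always dropped
    clean = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
    return f'<link rel="canonical" href="{escape(clean)}">'
```

Every filtered or sorted variant of a product listing would then point to the same clean URL.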

10. Implement Structured Data (Schema Markup)

Structured data helps search engines understand your content better and can lead to rich results in search pages.

Common schema types to implement:

- Organization

- Local Business

- Product

- Article

- FAQ

- Recipe

- Event

- Review

Benefits:

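- Enhanced search listings (rich results) with extra details such as ratings, prices, or cooking times

- A clearer, machine-readable description of what each page is about

Keep in mind that structured data makes a page eligible for rich results but doesn’t guarantee them.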

Implementation tools:

- Google’s Structured Data Markup Helper

- Schema.org references

- Testing with Google’s Rich Results Test

A recipe blog implemented Recipe schema markup across their content and saw a 38% increase in click-through rate from search results, largely because their listings now displayed cooking time, ratings, and calorie information directly in the search results.
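Google recommends JSON-LD for structured data. The Python sketch below generates minimal schema.org Recipe markup with the kinds of details mentioned in the example; the recipe_json_ld function and all values are hypothetical, and Google’s Recipe documentation lists further required and recommended properties, so validate the result with the Rich Results Test.

```python
import json


def recipe_json_ld(name: str, image_url: str, cook_minutes: int, calories: int,
                   rating: float, rating_count: int) -> str:
    """Return a <script> tag holding minimal schema.org Recipe markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "image": [image_url],
        # Durations use ISO 8601 format, e.g. PT60M for 60 minutes
        "cookTime": f"PT{cook_minutes}M",
        "nutrition": {"@type": "NutritionInformation", "calories": f"{calories} calories"},
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "ratingCount": rating_count,
        },
    }
    # Escape "</" so a value can never close the <script> element early ("\/" is valid JSON)
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```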

11. Check For Redirect Chains And Loops

Redirect chains occur when one redirect points to another redirect, creating a sequence that slows down both users and crawlers.

Problems with redirect chains:

- Each redirect adds loading time

- Link equity (ranking power) may not pass reliably through long chains, and Googlebot stops following a chain after a limited number of hops (up to 10)

- Crawl budget is wasted following chains

How to fix:

- Identify chains using tools like Screaming Frog or Sitebulb

- Update all redirects to point directly to the final destination

- Watch for redirect loops (A→B→C→A), which make pages completely inaccessible

An e-commerce site that had gone through multiple redesigns discovered their product pages were often behind 3-4 redirects. By cleaning these up to point directly to the current URLs, they improved load times and saw several pages improve in rankings.
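Once you export your redirect rules (for example, from a crawler or your server configuration), flattening them is a small graph exercise. This Python sketch assumes a hypothetical dictionary mapping each source path to its redirect target; it points every source straight at its final destination and reports loops separately so they can be fixed by hand.

```python
def follow_chain(redirects: dict[str, str], start: str) -> tuple[list[str], bool]:
    """Follow redirects from start; return the path taken and whether it loops."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, True  # came back to a URL already visited: a loop
        seen.add(current)
    return path, False


def flatten_redirects(redirects: dict[str, str]) -> tuple[dict[str, str], list[list[str]]]:
    """Map every source directly to its final destination; collect loops separately."""
    flattened: dict[str, str] = {}
    loops: list[list[str]] = []
    for source in redirects:
        path, is_loop = follow_chain(redirects, source)
        if is_loop:
            loops.append(path)
        else:
            flattened[source] = path[-1]
    return flattened, loops
```

For instance, a product URL that redirects /old-shoes → /shoes-2019 → /shoes-2021 → /running-shoes (three hops) would be rewritten as a single /old-shoes → /running-shoes redirect.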

12. Optimize Your Site Architecture For Crawl Depth

Pages buried deep within your site structure are less likely to be discovered and indexed by search engines.

Best practices:

- Keep important pages within 3 clicks of your homepage

- Use a logical hierarchical structure

- Implement breadcrumb navigation

- Include internal linking between related content

- Create category/hub pages that link to related content

Visualization tip: Use a tool like Screaming Frog to create a visual map of your site structure, helping you identify pages that are too deep in your hierarchy.
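Click depth is the fewest clicks needed to reach a page from the homepage, which is exactly what a breadth-first search over your internal links computes. This Python sketch assumes a hypothetical dictionary mapping each page to the pages it links to (as you might build from a crawler export); it flags pages deeper than three clicks and pages the homepage can’t reach at all.

```python
from collections import deque
from typing import Optional


def click_depths(links: dict[str, list[str]], homepage: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: depth = fewest clicks to reach a page."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # In BFS, the first time we reach a page is via a shortest path
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_too_deep(links: dict[str, list[str]], max_depth: int = 3,
                   homepage: str = "/") -> dict[str, Optional[int]]:
    """Pages deeper than max_depth, plus pages unreachable from the homepage (depth None)."""
    depths = click_depths(links, homepage)
    all_pages = set(links) | {target for targets in links.values() for target in targets}
    return {
        page: depths.get(page)
        for page in sorted(all_pages)
        if page not in depths or depths[page] > max_depth
    }
```

Pages reported with a depth of None are orphans: no internal path leads to them, so they are good candidates for links from a relevant hub or category page.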

13. Ensure Consistent Use Of Trailing Slashes And Lowercase URLs

Consistency in your URL format prevents duplicate content issues and maximizes your crawl budget.

Choose one approach and stick with it:

- Either always use trailing slashes (example.com/page/) or never use them (example.com/page)

- Always use lowercase URLs; URL paths are case-sensitive, so /Page and /page can be treated as two different addresses

Implementation:

- Configure your server to redirect all variations to your preferred format

- Check all internal links to ensure they use the preferred format

- Update any canonical tags to reflect your choice

This may seem like a minor detail, but to search engines, example.com/page and example.com/page/ can be treated as separate URLs with the same content—essentially creating a duplicate content problem you didn’t know you had.
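The redirect rule behind this is easy to express in code. This Python sketch (function names are our own) computes the preferred form of a URL under an assumed policy: lowercase host and path, plus a trailing slash on page URLs but not on file URLs such as /guide.pdf. Lowercasing paths is only safe if your server really serves the same content at the lowercase address, so check that before redirecting.

```python
from urllib.parse import urlsplit, urlunsplit


def preferred_url(url: str, trailing_slash: bool = True) -> str:
    """Return the URL in the site's preferred format (assumed policy described above)."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if trailing_slash and not path.endswith("/") and "." not in last_segment:
        path += "/"  # page URL without a slash: add one (files like guide.pdf are left alone)
    elif not trailing_slash and path != "/" and path.endswith("/"):
        path = path.rstrip("/")  # remove slashes, but keep the root "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, parts.query, parts.fragment))


def needs_redirect(url: str, trailing_slash: bool = True) -> bool:
    """True if the URL should 301-redirect to its preferred form."""
    return preferred_url(url, trailing_slash) != url
```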

14. Use Hreflang Tags For Multilingual Sites

If your site targets users in different countries or languages, hreflang tags tell search engines which version to show to which users.

Common mistakes to avoid:

- Missing self-referencing hreflang tags

- Inconsistent use across different pages

- Incorrect language/region codes

- Broken implementation in your XML sitemap

A global e-commerce site with versions for the US, UK, and EU markets implemented proper hreflang tags and saw a 22% increase in their international organic traffic as search engines began showing the appropriate versions to users in different regions.
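Most of the mistakes listed above disappear if every language version carries the same complete set of tags, including a tag pointing to itself. This Python sketch generates that set; the hreflang_tags name and URLs are hypothetical. It accepts only simple codes in the form of an ISO 639-1 language with an optional ISO 3166-1 region (so script subtags like zh-Hant are rejected), and it catches the common “en-uk” mistake, since the United Kingdom’s country code is GB.

```python
import re
from html import escape
from typing import Optional

# Language (ISO 639-1), optionally followed by a region (ISO 3166-1 alpha-2), e.g. "en" or "en-gb"
HREFLANG_CODE = re.compile(r"^[a-z]{2}(-[a-z]{2})?$", re.IGNORECASE)


def hreflang_tags(alternates: dict[str, str], default_url: Optional[str] = None) -> list[str]:
    """Build the full set of hreflang tags; place the SAME set on every listed page."""
    tags = []
    for code, url in sorted(alternates.items()):
        if not HREFLANG_CODE.match(code):
            raise ValueError(f"invalid hreflang code: {code!r}")
        if code.lower().endswith("-uk"):
            raise ValueError(f"use '-gb' for the United Kingdom, not {code!r}")
        tags.append(f'<link rel="alternate" hreflang="{code}" href="{escape(url)}">')
    if default_url:
        # x-default names the page to show when no listed language/region matches
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}">')
    return tags
```

Because the same list goes on each version, the US page automatically references itself and the UK page, and vice versa.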

15. Monitor And Fix Crawl Errors In Google Search Console

Regular monitoring of crawl errors helps you catch and fix issues before they seriously impact your rankings.

Key reports to monitor:

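- Page indexing report (formerly called Coverage) for indexing issues

- Lighthouse mobile audits for smartphone-specific problems (Search Console’s Mobile Usability report has been retired)

- Links report to review how your pages are linked internally and externally

- Core Web Vitals report for performance problems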

Maintenance routine:

- Set up a monthly technical SEO check

- Prioritize errors affecting important pages

- Document fixes and track progress over time

- Monitor server logs for crawler behavior

A media website made checking Google Search Console part of their weekly routine and quickly caught an accidental robots.txt change that was blocking crawlers from their news section. Because they caught it within days rather than weeks, they minimized the potential SEO damage.
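Server logs show what crawlers actually request, which complements Search Console’s view. This Python sketch counts requests claiming to be Googlebot by site section and status code; it assumes the common Apache/Nginx “combined” log format, and the function name is our own. Because anyone can fake a user agent, verify important findings with a reverse DNS lookup, as Google recommends.

```python
import re
from collections import Counter
from typing import Iterable

# Apache/Nginx "combined" log format (assumed); adjust if your server logs differently
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines: Iterable[str]) -> Counter:
    """Count (top-level section, HTTP status) pairs for requests claiming to be Googlebot."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # Skip unparseable lines and anything not claiming to be Googlebot
        if match is None or "Googlebot" not in match.group("agent"):
            continue
        # "/news/story-1?ref=x" -> "/news"
        first_segment = match.group("path").lstrip("/").split("/", 1)[0]
        section = "/" + first_segment.split("?", 1)[0]
        counts[(section, int(match.group("status")))] += 1
    return counts
```

Comparing these counts week over week can surface problems like the one above: a section whose Googlebot requests suddenly drop to zero deserves a look at robots.txt.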

Conclusion: 15 Must-Have Technical SEO Checklist Items For Digital Marketers

Technical SEO might seem overwhelming at first glance, but breaking it down into these manageable checklist items makes the process much more approachable. Remember that technical SEO isn’t a one-time task—it’s an ongoing process of monitoring, testing, and improvement.

As search engines continue to evolve, keeping your technical foundation solid will ensure that your content has the best possible chance to rank well. Whether you’re a small business owner managing your own site or a marketing professional overseeing larger websites, this checklist gives you a framework for success.

Start with the most critical issues first—typically those related to crawling, indexing, and major user experience factors like mobile-friendliness and site speed. Then work through the remaining items methodically, documenting your changes and improvements along the way.

Remember that even small technical improvements can yield significant results over time. The most successful websites aren’t just those with the best content—they’re the ones that make it easiest for both users and search engines to access and understand that content.

By implementing this technical SEO checklist, you’re laying the groundwork for sustainable organic growth, better user experiences, and a website that stands the test of time—even as search algorithms continue to evolve.